J Educ Eval Health Prof, Volume 20; 2023

Research article

Development and validation of the student ratings in clinical teaching scale in Australia: a methodological study

Pin-Hsiang Huang1,2,3, Anthony John O’Sullivan4,5, Boaz Shulruf2,6*

DOI: https://doi.org/10.3352/jeehp.2023.20.26

Published online: September 5, 2023

1Department of Medical Humanities and Education, College of Medicine, National Yang Ming Chiao Tung University, Taipei, Taiwan

2Office of Medical Education, Faculty of Medicine and Health, The University of New South Wales Sydney, Sydney, Australia

3Division of Infectious Disease, Department of Medicine, Taipei Veterans General Hospital, Taipei, Taiwan

4Faculty of Medicine and Health, The University of New South Wales Sydney, Sydney, Australia

5Department of Endocrinology, St George Hospital, Sydney, Australia

6Centre for Medical and Health Sciences Education, University of Auckland, Auckland, New Zealand

- *Corresponding email: b.shulruf@unsw.edu.au

© 2023 Korea Health Personnel Licensing Examination Institute

This is an open-access article distributed under the terms of the Creative Commons Attribution License (http://creativecommons.org/licenses/by/4.0/), which permits unrestricted use, distribution, and reproduction in any medium, provided the original work is properly cited.


Abstract


Purpose

- This study aimed to devise a valid measurement for assessing clinical students’ perceptions of teaching practices.


Methods

- A new tool was developed based on a meta-analysis encompassing effective clinical teaching-learning factors. Seventy-eight items were generated using a frequency (never to always) scale. The tool was administered to year 2, 3, and 6 medical students at the University of New South Wales. Exploratory factor analysis (EFA) and confirmatory factor analysis (CFA) were conducted to establish the tool’s construct validity and goodness of fit, and Cronbach’s α was used for reliability.


Results

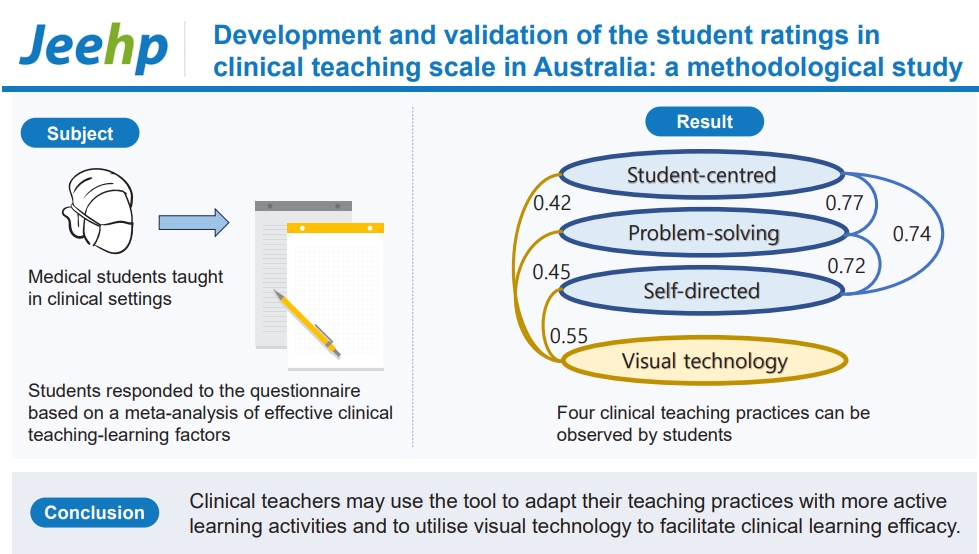

- In total, 352 students (42.2%) completed the questionnaire. The EFA identified 4 factors: student-centered learning, problem-solving learning, self-directed learning, and visual technology (reliability, 0.77 to 0.89). CFA showed acceptable goodness of fit (chi-square P<0.01, comparative fit index=0.930 and Tucker-Lewis index=0.917, root mean square error of approximation=0.069, standardized root mean square residual=0.06).


Conclusion

- The established tool—Student Ratings in Clinical Teaching (STRICT)—is a valid and reliable tool that demonstrates how students perceive clinical teaching efficacy. STRICT measures the frequency of teaching practices to mitigate the biases of acquiescence and social desirability. Clinical teachers may use the tool to adapt their teaching practices with more active learning activities and to utilize visual technology to facilitate clinical learning efficacy. Clinical educators may apply STRICT to assess how these teaching practices are implemented in current clinical settings.

Introduction

- Background/rationale

- Measuring clinical teaching efficacy relies on student ratings, and most universities use these to evaluate teaching. Student ratings are often sought at the completion of teaching activities using standardized rating forms. However, many clinical teaching measurements by student ratings fail to cover all aspects of clinical teaching methodology, and the instruments used often have limited evidence of validity [1]. Moreover, there is no consensus as to which practice is most effective. A recent meta-analysis investigated the effectiveness of teaching-learning factors (TLFs) in clinical education and provided a comprehensive overview of the relative effectiveness of different clinical teaching methods [2]. The resulting list of effective TLFs is now available for clinical educators as a useful source.

- Commonly, evaluation scale anchors allow participants to report the extent to which they agree with each given statement (e.g., a 5-point Likert scale from “strongly disagree” to “strongly agree”). However, agreement scales may reflect respondents’ attitudes towards behaviors instead of reporting their real experience or the effectiveness of the behavior. Both “social desirability,” a tendency to present oneself positively, and “acquiescence,” a tendency to endorse a statement regardless of its content, may result in respondents answering with positive agreement because students may be polite, empathize with the teacher, and wish to avoid supplying a negative response [1]. Conversely, when applying frequency scales, respondents focus on the behaviors or incidents and recall how often they occur [3]. Frequency scales are considered minimally biased because they are less likely to assess respondents’ attitudes or the intensity of their perceptions [3]. Therefore, in comparison to agreement scales, frequency scales may minimize or alleviate “acquiescence” or “social desirability” effects.

- Identifying effective clinical teaching methods and applying robust methods of tool development and evaluation provide the opportunity to develop a reliable and valid tool to measure clinical teaching practices [2].

- Objectives

- The current study aims to appraise the reliability and validity of the newly introduced Student Ratings in Clinical Teaching (STRICT) scale used to evaluate clinical teaching practices.

Methods

- Ethics statement

- This study, which involved medical students studying at the University of New South Wales in 2018, was conducted under ethics approval number HC180496. Approval was granted by the University of New South Wales review panels (HREAPG: Health, Medical, Community and Social). Informed consent was obtained from participants before they answered the questionnaire.

- Study design

- This was a cross-sectional study for validating a questionnaire.

- Setting

- The medicine program at the University of New South Wales, Sydney, Australia is 6 years in duration with approximately 280 students per year. Medical students in the second, third, fifth, and sixth years receive clinical training. Data were collected in 2018.

- Participants

- The participants were recruited from undergraduate medical students in years 2, 3, and 6 studying at the Faculty of Medicine and Health, University of New South Wales in 2018, and 834 medical students were invited to participate. The number of students who responded to the questionnaire was 352 (42.2%), among whom 157 (44.6%) were men, 183 (52.0%) were women, and 3.4% provided no data on gender. The mean age was 22.34 years (standard deviation [SD]=1.94 years). No exclusion criteria were applied.

- Data sources/measurement

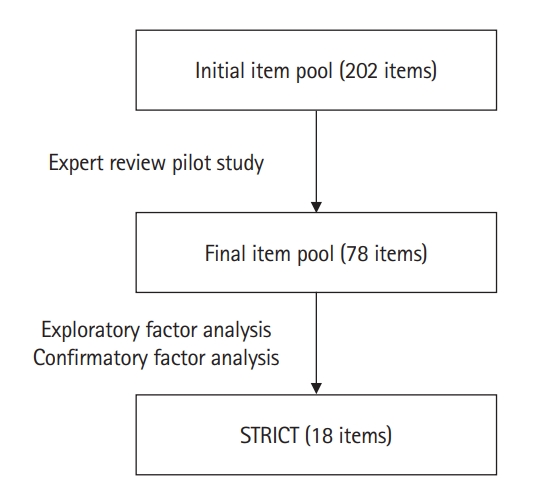

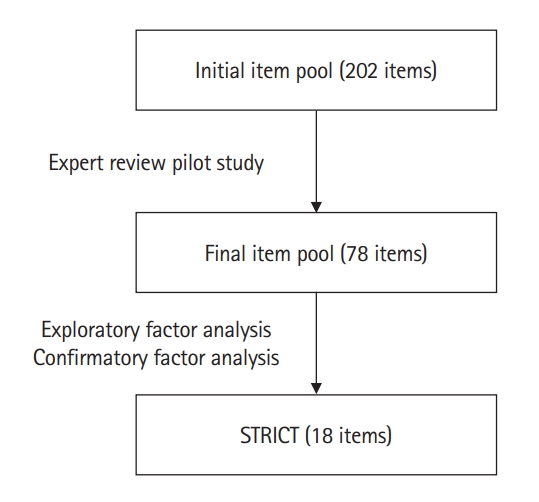

- The development of STRICT was derived from a meta-analysis of the effectiveness of clinical teaching [2] and used the 16 effective TLFs identified to construct the scale. The listed TLFs included, but were not limited to, mastery learning, concept mapping, visual-perception programs, problem-solving teaching, interactive video methods, and student-centered teaching. The authors initially generated an item pool of 202 items representing all TLFs. The item pool was then reviewed by 3 experts (1 scale expert and 2 clinicians), followed by a pilot study (with medical administrators and fifth-year medical students) to ensure the contents were appropriate and unambiguous. This reduced the number of items from 202 to 78, and 6-point frequency scales from “never” (=1) to “always” (=6) were applied (Fig. 1).

- Variables

- Demographic variables included the year of study, gender, and age. STRICT included 78 items (Supplement 1), and the latent variables were to be identified as part of the analysis.

- Bias

- Due to the nature of a validation study, response bias might have existed, yet it is expected to be minimal and not detectable.

- Study size

- A commonly recommended sample size is 10 participants per questionnaire item. It is also recommended that the sample size for exploratory factor analysis (EFA) and confirmatory factor analysis (CFA) of a questionnaire be around 300, with a minimum of 150 [4]. In this study, with an initial 78-item scale to be validated, a sample drawn from 3 year groups of medical students (n=834) was deemed acceptable if the response rate was >35%.
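- The arithmetic behind these thresholds can be sketched as follows. The item count, cohort size, and recommended sample sizes come from the text; the variable and function names are illustrative only. Under these figures, the 10-per-item rule would require 780 respondents, so the >35% criterion appears to correspond to the target of around 300 rather than to the per-item rule.

```python
import math

ITEMS = 78          # initial item pool, from the text
INVITED = 834       # students invited, from the text
PER_ITEM_RULE = 10  # participants per item (rule of thumb quoted in the text)
TARGET_N = 300      # recommended sample size for EFA/CFA [4]
MINIMUM_N = 150     # minimum sample size for EFA/CFA [4]


def required_response_rate(target_n: int, invited: int) -> float:
    """Fraction of invited students who must respond to reach target_n."""
    return target_n / invited


# Respondents needed under the 10-per-item rule of thumb.
per_item_target = ITEMS * PER_ITEM_RULE

# Response rates needed to reach the recommended and minimum sample sizes.
rate_for_target = required_response_rate(TARGET_N, INVITED)
rate_for_minimum = required_response_rate(MINIMUM_N, INVITED)

# Whole respondents obtained at exactly a 35% response rate.
respondents_at_35 = math.floor(0.35 * INVITED)

print(f"10-per-item rule: {per_item_target} respondents")
print(f"Rate needed for n={TARGET_N}: {rate_for_target:.1%}")
print(f"Rate needed for n={MINIMUM_N}: {rate_for_minimum:.1%}")
print(f"Respondents at 35%: {respondents_at_35}")
```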

- Statistical methods

- EFA was performed using the maximum likelihood method with oblimin rotation, and a factor loading of 0.45 was set as a cut-off point for items [5]. An eigenvalue of 1 was determined as the cut-off for an adequate amount of variance explained, and a scree plot was used to justify the cut-off point. CFA with structural equation modelling was performed using AMOS 24.0 (IBM Corp.), and correlations between factors, factor loading of each item, and model fit indices (chi-square, comparative fit index [CFI], Tucker-Lewis index [TLI], root mean square error of approximation [RMSEA], and standardized root mean square residual [SRMR]) were presented.
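- The item-retention rule can be illustrated with a minimal sketch. The loadings below are hypothetical and are not taken from Table 1; treating negative loadings by their magnitude and assigning each item to its highest-loading factor are assumptions made for illustration, not steps confirmed by the text.

```python
CUTOFF = 0.45  # factor-loading cut-off stated in the text

# Hypothetical pattern-matrix loadings: item -> loadings on 4 factors.
loadings = {
    "item_a": [0.71, 0.05, -0.02, 0.10],
    "item_b": [0.12, 0.44, 0.08, 0.03],    # just below the cut-off everywhere
    "item_c": [0.02, -0.03, -0.62, 0.07],  # negative loading, judged by magnitude
    "item_d": [0.20, 0.18, 0.11, 0.30],
}


def retained_items(loading_table, cutoff=CUTOFF):
    """Return {item: factor index} for items whose largest |loading| >= cutoff."""
    kept = {}
    for item, row in loading_table.items():
        # Index of the factor on which this item loads most strongly.
        best = max(range(len(row)), key=lambda k: abs(row[k]))
        if abs(row[best]) >= cutoff:
            kept[item] = best
    return kept


kept = retained_items(loadings)
print(kept)
```

In this toy table, only the items with a loading magnitude of at least 0.45 on some factor survive; the other two would be deleted.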

Results

- Participants

- Of the 834 medical students invited, 352 (42.2%) completed the questionnaire; 157 (44.6%), 183 (52.0%), and 8 (2.3%) were men, women, and unidentified, respectively. Of the respondents, 63 (17.6%), 134 (38.1%), and 151 (43.4%) were in years 2, 3, and 6, respectively; 4 (1.1%) did not report their year of study. The mean age was 22.34 years (SD=1.84 years), with a range of 19–30 years.

- Main results

- Exploratory factor analysis

- After deleting the items with low factor loadings (<0.45), 18 of the 78 items remained, and 4 factors were identified through EFA. The factor loadings for each item and factor groupings are listed in Table 1, and the correlations between factors are shown in Table 2.

- The first factor included items related to feedback and linkage from knowledge to practice (student-centered learning), and the second factor related to the utilization of visual technology (visual technology). The third factor included items relating to various problem-solving techniques (problem-solving learning), and the fourth dealt with self-reflection and goal setting (self-directed learning).

- The reliability (Cronbach’s α) for each factor was 0.89, 0.85, 0.78, and 0.77, respectively; all exceeded the acceptable threshold of 0.7. In terms of factor correlation, a high positive correlation (r=0.59) was found between student-centered learning and self-directed learning, and a high negative correlation (r=-0.55) was found between student-centered learning and problem-solving learning. Visual technology had a low negative correlation with problem-solving learning (r=-0.28). Other factor correlations were within the moderate range (between |0.34| and |0.43|) (Table 2). However, the correlations are subject to change by adjusting delta in the oblimin rotation.
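- For readers unfamiliar with the reliability coefficient, a minimal sketch of Cronbach’s α follows, using the standard formula α = k/(k−1) × (1 − Σ item variances / variance of total scores). The response matrix is hypothetical (5 respondents, 3 items on a 1 to 6 scale) and is not study data; the function name is illustrative.

```python
from statistics import variance


def cronbach_alpha(responses):
    """Cronbach's alpha for a list of respondent rows (each a list of item scores)."""
    k = len(responses[0])
    if k < 2 or len(responses) < 2:
        raise ValueError("need at least 2 items and 2 respondents")
    # Sample variance of each item across respondents.
    item_vars = [variance([row[i] for row in responses]) for i in range(k)]
    # Sample variance of each respondent's total score.
    total_var = variance([sum(row) for row in responses])
    return (k / (k - 1)) * (1 - sum(item_vars) / total_var)


# Hypothetical responses on a 6-point frequency scale (1=never, 6=always).
example = [
    [2, 3, 2],
    [4, 4, 5],
    [5, 6, 5],
    [1, 2, 1],
    [3, 3, 4],
]

alpha = cronbach_alpha(example)
print(f"alpha = {alpha:.2f}")  # 0.96 for this toy data
```

A value above 0.7, as reported for all 4 factors, is the threshold the text treats as acceptable.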

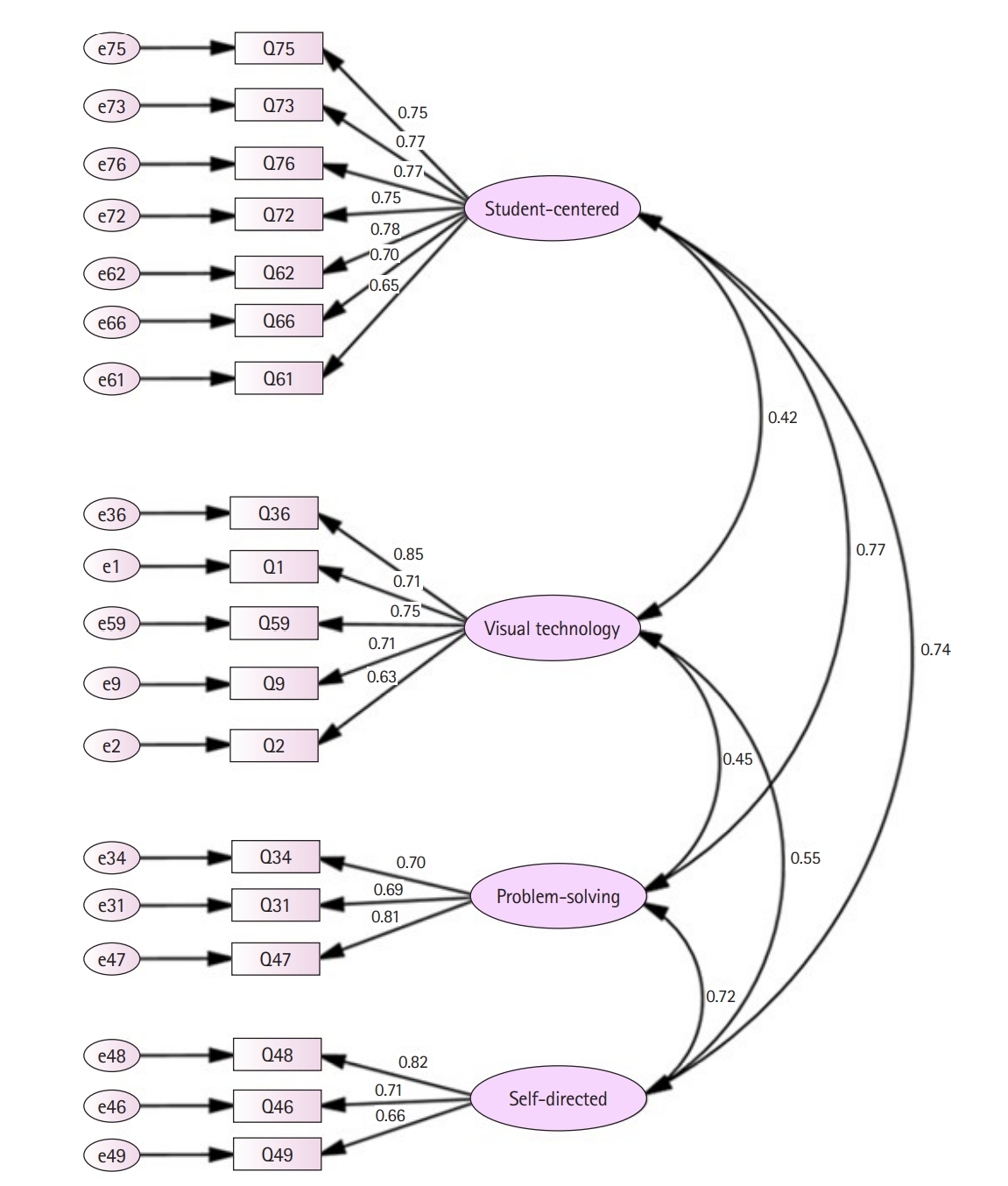

- Confirmatory factor analysis

- Using a first-order model, a further check with CFA was conducted (Fig. 2). Goodness of fit was acceptable on the chi-square test (χ2=345.71, degrees of freedom=129, P<0.01), and the CFI and the TLI (also known as the non-normed fit index) were also acceptable (CFI=0.930 and TLI=0.917) [6]. The RMSEA was 0.069, and the SRMR was 0.060; both were within the acceptable range.
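- The reported RMSEA can be cross-checked from the reported chi-square, degrees of freedom, and number of respondents. The sketch below assumes the common single-group formula RMSEA = sqrt(max(χ2 − df, 0) / (df × (N − 1))) with N=352; the function name is illustrative, and this is a consistency check rather than a rerun of the AMOS analysis.

```python
import math


def rmsea(chi_square: float, df: int, n: int) -> float:
    """Root mean square error of approximation (single-group formula, N - 1)."""
    return math.sqrt(max(chi_square - df, 0.0) / (df * (n - 1)))


CHI_SQUARE = 345.71  # reported in the text
DF = 129             # reported in the text
N = 352              # respondents, reported in the text

rmsea_value = rmsea(CHI_SQUARE, DF, N)
chi_square_ratio = CHI_SQUARE / DF  # normed chi-square, a commonly quoted companion index

print(f"RMSEA = {rmsea_value:.3f}")             # 0.069, matching the reported value
print(f"chi-square/df = {chi_square_ratio:.2f}")
```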

- The composite reliability was >0.7, which is within the acceptable to very good range (0.90 for student-centered learning, 0.85 for visual technology, 0.79 for problem-solving learning, and 0.78 for self-directed learning). Note, however, that student-centered learning, problem-solving learning, and self-directed learning were highly correlated with each other (0.72 to 0.77), while visual technology had lower correlations with them (0.42 to 0.55) (Fig. 2). Overall, the results indicate that STRICT is a reliable measurement tool and its construct validity is supported by the statistical analysis results.
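- Composite reliability is usually computed from standardized CFA loadings. The sketch below assumes the widely used formula CR = (Σλ)² / ((Σλ)² + Σ(1 − λ²)) with uncorrelated errors; the loadings are hypothetical and are not those shown in Fig. 2, and the text does not state which formula was applied.

```python
def composite_reliability(std_loadings):
    """Composite reliability from standardized loadings of one factor."""
    sum_loadings_sq = sum(std_loadings) ** 2
    # Error variance of each item under standardization: 1 - loading^2.
    error_var = sum(1 - lam ** 2 for lam in std_loadings)
    return sum_loadings_sq / (sum_loadings_sq + error_var)


# Hypothetical standardized loadings for a 3-item factor.
hypothetical_loadings = [0.70, 0.80, 0.75]

cr = composite_reliability(hypothetical_loadings)
print(f"CR = {cr:.2f}")
```

With 3 items loading between 0.70 and 0.80, the result lands just under 0.8, which is in the same range as the values reported for the two 3-item factors.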

Discussion

- Key results

- The purpose of this study was to develop and validate an effective tool to evaluate clinical teaching practices reported by students. Four teaching practices were identified: student-centered learning, visual technology, problem-solving learning, and self-directed learning.

- Interpretation

- Student-centered learning often refers to how students determine their learning goals and learning approaches with explicit guidance, as opposed to teacher-centered learning, where teachers take control over learning goals, content, and progress. The 7 items can be further grouped into learning goals (no. 76, 72, 62), the teaching approach (no. 73, 66), and the peer-learning process (no. 75, 61). Student-centered learning is generally based on students’ autonomy to learn, combined with clear intended learning outcomes, supportive teaching approaches and cooperative peer learning [7]. These associations are also demonstrated in the higher correlations between the “student-centered,” “self-directed,” and “problem solving” factors (Table 2). In clinical education, students as well as practicing doctors are required to keep learning and updating their skills and knowledge, despite time-consuming clinical duties. Therefore, medical educators have advocated that learners should take responsibility for their own learning, and thus, over the past 2 decades, medical education reforms have shifted towards student-centered approaches [8]. Students are more self-motivated when the difficulties they encounter are recognized and supported by clinical teachers, and students’ stress is reduced throughout this process. Hence, medical students increasingly engage in more clinical learning, and these items could explain how they learn from peers, produce self-determined learning goals, and provide supportive and timely feedback.

- Visual technology refers to the utilization of equipment to facilitate enhanced visual perception and learning experiences. Taking the visualization of the anatomical structure as an example, students may develop better understanding of anatomical and physiological interactions, enjoy the learning process more, and learn better if the learning is presented as a visual medium [9]. Out of 5 items loading on the visual technology factor, 3 items related to how technology enhances visual perception (no. 1, 36, 59), and 2 related to the activity (no. 2, 9). To illustrate how visual technology affects clinical learning, virtual reality, for example, provides students with opportunities to practice their skills and safely bridge the gap from knowledge to bedside practice [10]. In such environments, students are allowed to learn from errors without profound negative consequences and receive self-visual feedback through digital records in relevant computer-based simulations. Furthermore, clinical students will benefit from visual technology if it requires them to identify anatomical landmarks and structures, or interpret clinical images and laboratory data. In conclusion, the use of visual technologies helps students safely practice their knowledge and skills, and the interactive interface can enhance their spatial concepts of anatomy and physiological interactions, for example, enabling them to also practice decision-making, clinical skills, and reasoning.

- Problem-solving learning focuses on students identifying, prioritizing, and solving problems with appropriate guidance and support from teachers. Three items loaded onto this factor, that is, problem identification, prioritization, and solution. In clinical settings, students practice analytical skills, such as blood test interpretation, and clinical reasoning through problem-solving learning. Although some criticism points towards the difficulty of integrating problem-solving learning in clinical settings, it is feasible to adopt this form of learning into daily tasks, such as dealing with certain disease manifestations [11]. Therefore, problem-solving learning may train students to efficiently identify problems and challenges in clinical practices and then divide the problems into manageable components; and with the aid of clinical reasoning, these approaches gradually facilitate students to learn and practice independently.

- The traits of self-directed learning can be divided into task-oriented learning, student-teacher communication, and self-reflection. Three items loaded on self-directed learning and represent these 3 traits (no. 49 for task-oriented learning, no. 46 for student-teacher communication, and no. 48 for self-reflection). In clinical settings, students often learn by performing new tasks when they meet new problems. Therefore, this learn-from-tasks model is adapted to task-based learning, a component of problem-solving learning [12]. In addition, self-directed learning can be performed by a small group of students wishing to achieve certain goals or complete assessments, tasks and projects, and students in the same clinical attachment can learn independently whilst peer-teaching and cooperating; and this approach may result in them developing greater confidence as well as psychomotor and cognitive skills [13]. Moreover, it is important for clinical students to rethink what they have learnt and recognize what they still do not know, and how to improve and fill in knowledge and skills gaps. This process of reflection is referred to as using meta-cognitive skills and is often used in clinical reasoning. For example, Gibbs’ model of self-reflection explains how students make action plans based on reflecting upon past experiences, and students gradually improve their interviewing skills via this approach [14]. In conclusion, self-directed learning is closely related to problem-solving learning, small group learning and meta-cognitive strategies. However, the usefulness of these traits relies on how actively students initiate their learning. Students must take responsibility to set and meet their own goals and undertake frequent self-reflection. Therefore, these items reflect how students direct their learning and monitor their own progress; hence, they fit the term “self-directed learning” well.

- STRICT’s main strengths are that (1) it is based on observation more than judgement, which is preferable, particularly when used by non-experts; and (2) it focuses on domains that are found most relevant for the quality of teaching [2]. STRICT’s main weakness is that 2 domains have only 3 items each. Although this is acceptable, further research should aim to improve the STRICT tool by adding more items.

- Comparison with previous studies

- In comparison to previous tools used to evaluate clinical teaching, STRICT successfully addresses some critical shortcomings of commonly used tools such as the Dundee Ready Education Environment Measure and Maastricht Clinical Teaching Questionnaire [15]. In particular, STRICT’s items were developed from a robust meta-analysis that identified effective clinical teaching practices; the response anchors used a 6-point frequency scale, which is less vulnerable to bias; and the sample was diverse and large enough to include students from the second to the final year of the medicine program. The findings of good reliability measures and model fit support the validity and robustness of STRICT.

- Limitations

- A limitation of the study was that the sample size was not large enough to consider different teaching and learning conditions in different specialties. The student samples were collected from 11 hospitals and across more than 20 specialities; it was difficult, however, to undertake further analysis to include impacts of the various sites and specialities. Another limitation was that individual differences among students were not considered.

- Generalizability

- Three features of the study suggest that STRICT is most likely to be a useful evaluation tool for clinical teaching globally: the diversity of student experience, clinical settings, and personal/demographic backgrounds; the nature of the items, which capture observable clinical teaching practices rather than personal judgment; and the strong support for its validity from the psychometric indices. Nonetheless, further research is required to establish this by using additional empirical results from well-designed studies undertaken within diverse contexts.

- Suggestions

- Future studies should examine the impact of individual differences across respondents and contexts. The validity and reliability of STRICT should be checked across different countries and settings to establish its generalizability.

- Conclusion

- STRICT is a valid and reliable tool that demonstrates how students perceive clinical teaching efficacy. A major difference of STRICT is that it measures the frequency of teaching practices rather than students’ judgement of them, and as such, it might mitigate the biases of acquiescence and social desirability. Based on these findings, clinical teachers might adapt their teaching practices to include more active learning activities and utilize visual technology to facilitate clinical learning efficacy. Clinical educators may apply STRICT to assess how these teaching practices are implemented in current clinical settings.

Article information


Authors’ contributions

Conceptualization: PHH, AJO, BS. Data curation: PHH. Methodology/formal analysis/validation: PHH, AJO, BS. Project administration: BS. Funding acquisition: PHH. Writing–original draft: PHH. Writing–review & editing: PHH, AJO, BS.


Conflict of interest

Boaz Shulruf has been an associate editor of the Journal of Educational Evaluation for Health Professions since 2017, but had no role in the decision to publish this review. No other potential conflict of interest relevant to this article was reported.


Funding

This study was supported by a scholarship from Taipei Veterans General Hospital-National Yang-Ming University Excellent Physician Scientists Cultivation Program (105-Y-A-005; to P.-H H between 2017 and 2020). The funders had no role in study design, data collection and analysis, decision to publish, or preparation of the manuscript.


Data availability

Data files are available from Harvard Dataverse: https://doi.org/10.7910/DVN/Y1VZUK

Dataset 1. The data file contains the currently-used guidelines for manuscript preparation.

Acknowledgments

Supplementary materials

References

- 1. Eggleton K, Goodyear-Smith F, Henning M, Jones R, Shulruf B. A psychometric evaluation of the University of Auckland General Practice Report of Educational Environment: UAGREE. Educ Prim Care 2017;28:86-93. https://doi.org/10.1080/14739879.2016.1268934

- 2. Huang PH, Haywood M, O’Sullivan A, Shulruf B. A meta-analysis for comparing effective teaching in clinical education. Med Teach 2019;41:1129-1142. https://doi.org/10.1080/0142159X.2019.1623386

- 3. Brown G, Shulruf B. Response option design in surveys. In: Ford LR, Scandura TA, editors. The SAGE handbook of survey development and application. Sage Publications; 2023. p. 120-131.

- 4. Kyriazos TA. Applied psychometrics: sample size and sample power considerations in factor analysis (EFA, CFA) and SEM in general. Psychology 2018;9:2207-2230. https://doi.org/10.4236/psych.2018.98126

- 5. Kang Y, McNeish DM, Hancock GR. The role of measurement quality on practical guidelines for assessing measurement and structural invariance. Educ Psychol Meas 2016;76:533-561. https://doi.org/10.1177/0013164415603764

- 6. Byrne BM. Structural equation modeling with AMOS: basic concepts, applications, and programming. 3rd ed. Routledge; 2016. 460 p.

- 7. McCabe A, O’Connor U. Student-centred learning: the role and responsibility of the lecturer. Teach High Educ 2014;19:350-359. https://doi.org/10.1080/13562517.2013.860111

- 8. Mehta NB, Hull AL, Young JB, Stoller JK. Just imagine: new paradigms for medical education. Acad Med 2013;88:1418-1423. https://doi.org/10.1097/ACM.0b013e3182a36a07

- 9. Wang M, Wu B, Chen NS, Spector JM. Connecting problem-solving and knowledge-construction processes in a visualization-based learning environment. Comput Educ 2013;68:293-306. https://doi.org/10.1016/j.compedu.2013.05.004

- 10. Weller J, Henderson R, Webster CS, Shulruf B, Torrie J, Davies E, Henderson K, Frampton C, Merry AF. Building the evidence on simulation validity: comparison of anesthesiologists’ communication patterns in real and simulated cases. Anesthesiology 2014;120:142-148. https://doi.org/10.1097/ALN.0b013e3182a44bc5

- 11. Burn K, Mutton T. A review of ‘research-informed clinical practice’ in initial teacher education. Oxf Rev Educ 2015;41:217-233. https://doi.org/10.1080/03054985.2015.1020104

- 12. Ge X, Planas LG, Huang K. Guest editors’ introduction: special issue on problem-based learning in health professions education/toward advancement of problem-based learning research and practice in health professions education: motivating learners, facilitating processes, and supporting with technology. Interdiscip J Probl Based Learn 2015;9:5. https://doi.org/10.7771/1541-5015.1550

- 13. Carr SE, Brand G, Wei L, Wright H, Nicol P, Metcalfe H, Saunders J, Payne J, Seubert L, Foley L. “Helping someone with a skill sharpens it in your own mind”: a mixed method study exploring health professions students experiences of Peer Assisted Learning (PAL). BMC Med Educ 2016;16:48. https://doi.org/10.1186/s12909-016-0566-8

- 14. Akhigbe T, Monday E. Reflection and reflective practice in general practice: a systematic review exploring and evaluating key variables influencing reflective practice. J Adv Med Med Res 2022;34:34-44. https://doi.org/10.9734/jammr/2022/v34i331271

- 15. Rodino AM, Wolcott MD. Assessing preceptor use of cognitive apprenticeship: is the Maastricht Clinical Teaching Questionnaire (MCTQ) a useful approach? Teach Learn Med 2019;31:506-518. https://doi.org/10.1080/10401334.2019.1604356

