J Educ Eval Health Prof, Volume 16, 2019

Brief report

No observed effect of a student-led mock objective structured clinical examination on subsequent performance scores in medical students in Canada

Lorenzo Madrazo1, Claire Bo Lee2, Meghan McConnell1,3, Karima Khamisa1*, Debra Pugh1,4,5

DOI: https://doi.org/10.3352/jeehp.2019.16.14

Published online: May 27, 2019

1Faculty of Medicine, University of Ottawa, Ottawa, ON, Canada

2Department of Medicine, McGill University, Montreal, QC, Canada

3Department of Anesthesiology and Pain Medicine, University of Ottawa, Ottawa, ON, Canada

4Department of Medicine, University of Ottawa, Ottawa, ON, Canada

5Medical Council of Canada, Ottawa, ON, Canada

*Corresponding email: kkhamisa@toh.ca

© 2019, Korea Health Personnel Licensing Examination Institute

This is an open-access article distributed under the terms of the Creative Commons Attribution License (http://creativecommons.org/licenses/by/4.0/), which permits unrestricted use, distribution, and reproduction in any medium, provided the original work is properly cited.

Abstract

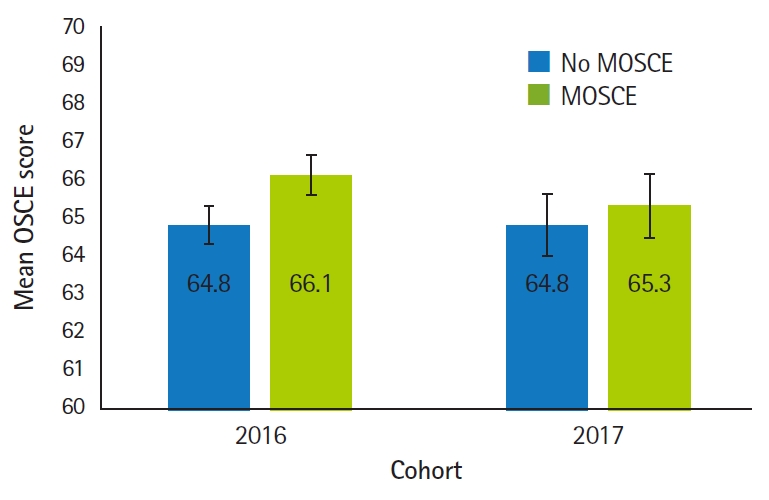

Student-led peer-assisted mock objective structured clinical examinations (MOSCEs) have been used in various settings to help students prepare for subsequent higher-stakes, faculty-run OSCEs. MOSCE participants generally valued feedback from peers and reported benefits to learning. Our study investigated whether participation in a peer-assisted MOSCE affected subsequent OSCE performance. To determine whether mean OSCE scores differed depending on whether medical students participated in the MOSCE, we conducted a between-subjects analysis of variance, with cohort (2016 vs. 2017) and MOSCE participation (MOSCE vs. no MOSCE) as independent variables and the mean OSCE score as the dependent variable. Participation in the MOSCE had no significant effect on mean OSCE scores (P=0.19). There was a significant correlation between mean MOSCE scores and mean OSCE scores (Pearson r=0.52, P<0.001). Although previous studies described self-reported benefits from participation in student-led MOSCEs, MOSCE participation was not associated with objective benefits in this study.

Authors’ contributions

Conceptualization: LM, CBL, MM, KK, DP. Data curation: DP. Formal analysis: DP, MM. Funding acquisition: KK. Methodology: LM, CBL, DP. Project administration: KK. Visualization: None. Writing–original draft: LM. Writing–review & editing: LM, CBL, MM, KK, DP.

Conflict of interest

Dr. Khamisa is a speaker for Amgen and Novartis Canada. The authors alone are responsible for the content and writing of this article. No potential conflict of interest relevant to this article was reported.

Funding

This study was funded by a grant from the Ottawa Blood Diseases Centre (OHCO 973 107 000), the Ottawa Hospital. The funding source had no role in data collection, analysis, or the preparation of this manuscript.

Appendix

Appendix 1.

| Variable | Mean second-year OSCE score±SD | t-value (df) | P-value |
|---|---|---|---|
| 2016 Third-year MOSCE | | 1.500 (161) | 0.136 |
| Participants (n=43) | 78.5±3.7a) | | |
| Non-participants (n=120) | 77.4±4.1a) | | |
| 2017 Third-year MOSCE | | 1.174 (161) | 0.242 |
| Participants (n=45) | 75.4±4.8b) | | |
| Non-participants (n=118) | 74.5±4.1b) | | |

The independent-samples t-test was used, and 2-tailed P-values were calculated. For the 2016 third-year OSCE (n=172), the median was 65.5 (mean=65.1) and for the 2017 third-year OSCE (n=160), the median was 64.8 (mean=64.9). Using a median split, students were grouped as either high- or low-performing. The chi-square test for independence was performed and no relationship was found between performance (high or low) on the OSCE and participation in the MOSCE as either an examinee or standardized patient (see Tables 2–4).

OSCE, objective structured clinical examination; MOSCE, mock OSCE; SD, standard deviation; df, degrees of freedom.

a) Second-year OSCE score 2015.

b) Second-year OSCE score 2016.
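As a rough check on the Appendix 1 table, the independent-samples t statistic can be recomputed from the published group means, standard deviations, and sample sizes. The sketch below assumes a pooled-variance (Student's) t-test, which is consistent with the reported df of 161 but is not stated explicitly in the source; the function name `pooled_t_from_summary` is hypothetical. Because the inputs are rounded to one decimal place, the results should land close to, but not exactly on, the reported t-values.

```python
import math


def pooled_t_from_summary(mean1, sd1, n1, mean2, sd2, n2):
    """Student's t statistic and df from two groups' summary statistics.

    Assumes equal variances (pooled estimate); this is an assumption,
    not something the source confirms.
    """
    df = n1 + n2 - 2
    pooled_var = ((n1 - 1) * sd1 ** 2 + (n2 - 1) * sd2 ** 2) / df
    standard_error = math.sqrt(pooled_var * (1 / n1 + 1 / n2))
    return (mean1 - mean2) / standard_error, df


# Summary values copied from the Appendix 1 table (participants vs. non-participants).
t_2016, df_2016 = pooled_t_from_summary(78.5, 3.7, 43, 77.4, 4.1, 120)
t_2017, df_2017 = pooled_t_from_summary(75.4, 4.8, 45, 74.5, 4.1, 118)

print(f"2016: t({df_2016}) = {t_2016:.3f}")
print(f"2017: t({df_2017}) = {t_2017:.3f}")
```

Small gaps between these values and the published ones are expected from rounding in the table and do not by themselves indicate an error in the original analysis.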

| Participation as mock OSCE 2016 examinee | Third-year OSCE 2016: low performers | Third-year OSCE 2016: high performers | Total |
|---|---|---|---|
| Yes | 20 | 26 | 46 |
| No | 58 | 57 | 115 |
| Total | 78 | 83 | 161 |

| Participation as mock OSCE 2017 examinee | Third-year OSCE 2017: low performers | Third-year OSCE 2017: high performers | Total |
|---|---|---|---|
| Yes | 22 | 22 | 44 |
| No | 54 | 57 | 111 |
| Total | 76 | 79 | 155 |

| Participation in mock OSCE 2017 as SP | Second-year OSCE 2017: low performers | Second-year OSCE 2017: high performers | Total |
|---|---|---|---|
| Yes | 10 | 5 | 15 |
| No | 66 | 80 | 146 |
| Total | 76 | 85 | 161 |

χ² = (1, N=161), P=0.113. The correlation between 2016 mock OSCE scores and subsequent scores on a third-year OSCE was high (r=0.490, P=0.001). Similarly, the 2017 mock OSCE and subsequent third-year OSCE scores showed a high correlation (r=0.619, P<0.001). However, the correlation between the second-year and third-year OSCEs was also high (r=0.560, P<0.001; r=0.510, P<0.001).

OSCE, objective structured clinical examination; SP, standardized patient.
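The chi-square test for independence on the standardized patient (SP) table can be sketched from the four cell counts alone. The example below assumes a Pearson chi-square without continuity correction, which is not stated in the source; the function name `chi_square_2x2` is hypothetical. For 1 degree of freedom the upper-tail P-value has a closed form through the complementary error function, so no statistics library is needed.

```python
import math


def chi_square_2x2(a, b, c, d):
    """Pearson chi-square (no continuity correction) for a 2x2 table.

    Cells are laid out as [[a, b], [c, d]]. Returns (statistic, p_value)
    for 1 degree of freedom.
    """
    n = a + b + c + d
    row1, row2 = a + b, c + d
    col1, col2 = a + c, b + d
    chi2 = n * (a * d - b * c) ** 2 / (row1 * row2 * col1 * col2)
    # With df=1, P(X > x) = erfc(sqrt(x / 2)) because X is a squared standard normal.
    p_value = math.erfc(math.sqrt(chi2 / 2))
    return chi2, p_value


# Counts from the SP participation table: rows are Yes/No, columns are low/high performers.
chi2_sp, p_sp = chi_square_2x2(10, 5, 66, 80)
print(f"chi2(1, N=161) = {chi2_sp:.3f}, P = {p_sp:.3f}")
```

Under these assumptions the P-value agrees with the reported P=0.113 to three decimals, which suggests the published test was run without a continuity correction. The same function can be applied to the two examinee tables.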
